Motivation:

- Context lengths of LLMs have been growing rapidly, and models now accept inputs of many thousands of tokens

- Increasing context length allows for processing of lengthy external documents, enhancing memory for long conversations, search, summarization, etc.

- How effectively these long-context LLMs use information across the full length of the enlarged context window has not yet been properly explored

- This research asks whether these models can robustly access and use information located within extended context windows, specifically when the relevant information is positioned in the middle.

- Do these models exhibit primacy bias (favoring information at the beginning of the context window) or recency bias (end of context window)?

External Context Review:

No external research was needed or conducted to understand this paper.

Conceptual Walkthrough:

Authors devised controlled experiments focusing on two tasks:

Multi-document Question Answering: Models receive multiple documents, only one containing the answer to a given question. Researchers systematically vary the position of the answer-containing document within the input context.
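The position manipulation described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `build_qa_prompt` and `position_sweep`, the `Document [i]` numbering, and the instruction wording are all assumptions made for this example.

```python
from typing import List


def build_qa_prompt(question: str, gold_doc: str,
                    distractors: List[str], gold_position: int) -> str:
    """Build one prompt with the answer-containing document at a chosen index.

    Hypothetical helper: the prompt template is an assumption of this sketch.
    """
    if not 0 <= gold_position <= len(distractors):
        raise ValueError("gold_position must be in [0, len(distractors)]")
    docs = list(distractors)              # copy so the caller's list is untouched
    docs.insert(gold_position, gold_doc)  # only the gold document's position varies
    numbered = "\n".join(
        f"Document [{i}] {doc}" for i, doc in enumerate(docs, start=1)
    )
    return (
        "Answer the question using the documents below.\n\n"
        f"{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


def position_sweep(question: str, gold_doc: str,
                   distractors: List[str]) -> List[str]:
    """Return one prompt per possible position of the gold document."""
    return [
        build_qa_prompt(question, gold_doc, distractors, pos)
        for pos in range(len(distractors) + 1)
    ]
```

Scoring a model on each prompt in the sweep and plotting accuracy against the gold document's index gives the kind of position-versus-accuracy curve discussed under Key Findings.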

Key-Value Retrieval: A simplified task where models need to extract the value corresponding to a specific key from a JSON object. The position of the key-value pair is manipulated within the input.
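A rough sketch of how such a key-value input might be built is shown below. The helper name `build_kv_prompt`, the use of random UUID strings as stand-in keys and values, and the prompt wording are assumptions of this example rather than details taken from these notes.

```python
import json
import uuid
from typing import Tuple


def build_kv_prompt(num_pairs: int, gold_position: int) -> Tuple[str, str, str]:
    """Build a key-value retrieval prompt with the queried pair at a chosen index.

    Hypothetical helper; returns (prompt, queried_key, expected_value).
    """
    if not 0 <= gold_position < num_pairs:
        raise ValueError("gold_position must be in [0, num_pairs)")
    # Assumption: random UUID strings stand in for keys and values,
    # so the model cannot guess a value from the meaning of its key.
    pairs = [(str(uuid.uuid4()), str(uuid.uuid4())) for _ in range(num_pairs)]
    gold_key, gold_value = pairs[gold_position]
    # dict preserves insertion order, so the serialized JSON keeps each
    # pair at the position it was generated in.
    data = json.dumps(dict(pairs), indent=1)
    prompt = (
        "Extract the value corresponding to the specified key "
        "in the JSON object below.\n\n"
        f"JSON data:\n{data}\n\n"
        f'Key: "{gold_key}"\nCorresponding value:'
    )
    return prompt, gold_key, gold_value
```

Calling `build_kv_prompt(num_pairs, pos)` for every `pos` yields a set of inputs that differ only in where the queried pair sits, which isolates the effect of position from the effect of content.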

Key Findings:

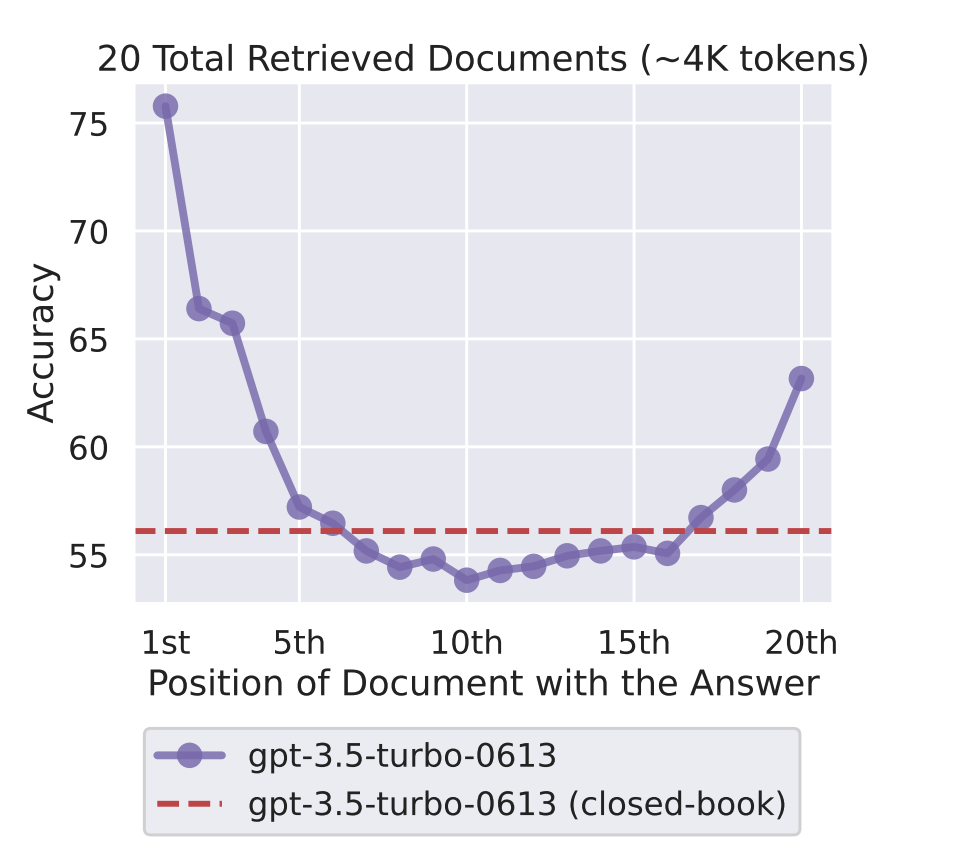

The U-shaped Performance Curve: A consistent finding across both tasks is the emergence of a U-shaped performance curve. Model accuracy is generally higher when the relevant information is at the beginning (primacy bias) or the end (recency bias) of the input context. Performance significantly deteriorates when models need to access information in the middle of the context. This pattern suggests that current long-context language models do not robustly utilize the entirety of their input context.

Extended Context Doesn’t Guarantee Better Performance: Interestingly, extended-context models (those with larger maximum context window sizes) do not consistently outperform their standard counterparts. This suggests that simply increasing the context window size might not be sufficient for improving the effective utilization of long contexts.

- Decoder-only models are more vulnerable to this phenomenon; encoder-decoder models are more resilient, though only while the input stays within their training-time sequence length

- Comparing a base language model (MPT-30B) with its instruction-tuned counterpart (MPT-30B-instruct) shows that both models exhibit the U-shaped curve, although the performance disparity between best- and worst-case scenarios is slightly reduced

- Solutions: organize information before feeding it to the model, preprocessing the input to give it structure and a “roadmap”.

- Effective Reranking: position relevant information closer to the beginning of the input, leveraging observed primacy bias in the models

- Ranked List Truncation: truncate the ranked list of retrieved documents, retrieving fewer documents where appropriate

- Query-Aware Contextualization: place the query both before and after the data that is to be processed

- Understanding the Impact of Model Scale and Fine-Tuning: analysis of Llama-2 models reveals that the U-shaped performance curve is more pronounced in larger language models (13B and 70B) than in smaller models (7B)
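The three input-side mitigations listed above (reranking, ranked list truncation, and query-aware contextualization) can be combined in a small preprocessing step. The sketch below is illustrative only: `rerank_and_truncate`, `query_aware_prompt`, the relevance scores, and the prompt layout are hypothetical and assume that some retriever has already assigned each document a score.

```python
from typing import List, Tuple


def rerank_and_truncate(scored_docs: List[Tuple[str, float]],
                        top_k: int) -> List[str]:
    """Sort documents by descending relevance score and keep the best top_k.

    Hypothetical helper: the most relevant documents end up at the start of
    the context, and low-scoring ones are dropped entirely.
    """
    ranked = sorted(scored_docs, key=lambda pair: pair[1], reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


def query_aware_prompt(question: str, docs: List[str]) -> str:
    """Place the question both before and after the documents."""
    numbered = "\n".join(
        f"Document [{i}] {doc}" for i, doc in enumerate(docs, start=1)
    )
    return (
        f"Question: {question}\n\n"
        f"{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Example usage with made-up documents and scores.
retrieved = [
    ("Berlin is the capital of Germany.", 0.2),
    ("Paris is the capital of France.", 0.9),
    ("The Eiffel Tower is in Paris.", 0.7),
]
top_docs = rerank_and_truncate(retrieved, top_k=2)
prompt = query_aware_prompt("What is the capital of France?", top_docs)
```

Whether this helps in practice depends on the quality of the relevance scores: a reranker that misplaces the relevant document can push it into the middle of the context, where the notes above indicate models are weakest.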

Conclusion:

This paper examines how well language models use long input contexts and finds they struggle to access information in the middle of these contexts. This is evident in the consistent U-shaped performance curve observed across tasks, where accuracy is high when relevant information is at the beginning or end (primacy and recency bias) but drops significantly when it’s in the middle.

This has implications for real-world applications like question answering and information retrieval. The paper suggests further research on:

- Optimizing model architecture for long contexts

- Refining query-aware contextualization techniques

- Developing fine-tuning strategies to address positional biases

The research highlights the need for new evaluation methods that assess the robustness of long-context models to variations in the position of relevant information.